iOrb - Unifying Command and 3D Input for Mobile Augmented Reality

Lab project in the area of Virtual and Augmented Reality.

Keywords: Studierstube, Mobile Computing, Augmented Reality.

About this Project

Input for mobile augmented reality systems is notoriously difficult. Three-dimensional visualization would ideally be accompanied by 3D interaction, but accurate tracking technology usually relies on fixed infrastructure and is not suitable for mobile use. Command input is simpler, but it is usually tied to devices that are not suited to 3D interaction and therefore require an additional mapping; the standard mapping uses image-plane techniques relative to the user's view. We present a new concept, the iOrb, that combines simple 3D interaction for selection and dragging with a 2D analog input channel suitable for command input in mobile augmented reality applications.

This work is part of the Studierstube research project.

Additional Information

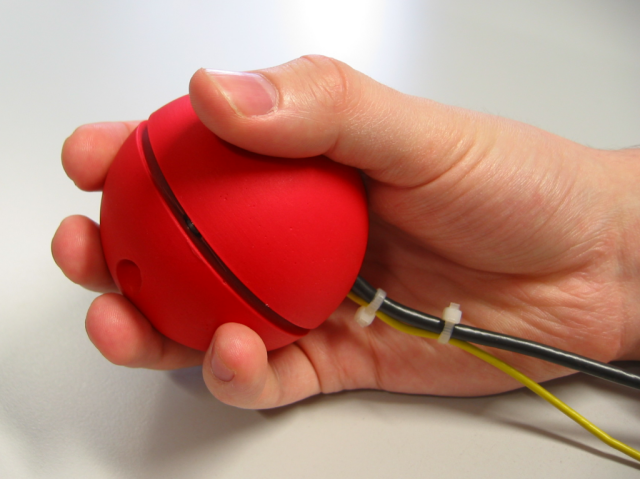

The iOrb consists of an off-the-shelf inertial tracker that measures 3D orientation, built into a convenient sphere-like case, and a single button that is activated by pressing the two half-spheres together (see Figure 1). The resulting device overcomes the limitation that 3D interaction is restricted to the user’s view: the measured orientation can be combined with the head position to create a second 6D pose that is partly independent of the view pose. This device pose is used for ray casting, which allows view-independent selection and interaction and can be beneficial for dragging and other manipulations.
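The following Python sketch illustrates how such a combined pose could drive ray casting for selection. It is not the original implementation and rests on several assumptions: the head position comes from the view tracking, the iOrb's orientation is reported as a unit quaternion (w, x, y, z) in the same world frame, the device "points" along its local -Z axis, and selectable objects are approximated by bounding spheres. The names `device_ray`, `pick`, `Target` and `FORWARD` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional

Vec3 = tuple[float, float, float]
Quat = tuple[float, float, float, float]  # (w, x, y, z), unit length

# Assumption: the iOrb's pointing direction is its local -Z axis.
FORWARD: Vec3 = (0.0, 0.0, -1.0)


@dataclass
class Target:
    name: str
    center: Vec3
    radius: float  # bounding-sphere radius (hypothetical proxy geometry)


def quat_rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by the unit quaternion q, i.e. compute q * v * conj(q)."""
    w, x, y, z = q
    vx, vy, vz = v
    # t = 2 * cross(q.xyz, v)
    tx = 2.0 * (y * vz - z * vy)
    ty = 2.0 * (z * vx - x * vz)
    tz = 2.0 * (x * vy - y * vx)
    # v' = v + w * t + cross(q.xyz, t)
    return (
        vx + w * tx + (y * tz - z * ty),
        vy + w * ty + (z * tx - x * tz),
        vz + w * tz + (x * ty - y * tx),
    )


def device_ray(head_pos: Vec3, orb_orientation: Quat) -> tuple[Vec3, Vec3]:
    """Second pose: position from the head tracker, orientation from the iOrb."""
    return head_pos, quat_rotate(orb_orientation, FORWARD)


def pick(origin: Vec3, direction: Vec3, targets: list[Target]) -> Optional[Target]:
    """Return the closest target whose bounding sphere the ray hits, if any."""
    best, best_t = None, math.inf
    for tgt in targets:
        oc = tuple(c - o for c, o in zip(tgt.center, origin))
        t_mid = sum(a * b for a, b in zip(oc, direction))  # direction is unit length
        if t_mid < 0.0:
            continue  # sphere centre lies behind the ray origin
        d2 = sum(a * a for a in oc) - t_mid * t_mid  # squared ray-to-centre distance
        if d2 > tgt.radius ** 2:
            continue  # ray misses this sphere
        t_hit = t_mid - math.sqrt(tgt.radius ** 2 - d2)
        if t_hit < best_t:
            best, best_t = tgt, t_hit
    return best
```

Because the ray origin follows the head while its direction follows the hand, an object can be selected or dragged without the view having to be aimed at it.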

Moreover, the iOrb can also serve as a 2D input device similar to a trackball. The 2D input is obtained by mapping the 3D orientation to a 2D parametrization. Together with the built-in button, a user can operate hierarchical menus and other widgets such as sets of checkboxes or lists.

Principles of Operation

To use the iOrb as a 2D input device, we need to define a 2D parametrization of the 3D orientation; the resulting two-dimensional parameter vector then serves as input. One way to map a 3D orientation to a 2D analog input is to decompose the full 3D rotation into two consecutive rotations around orthogonal axes. The two rotation angles become the two input dimensions.
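A minimal Python sketch of such a decomposition follows, assuming orientations arrive as unit quaternions (w, x, y, z) and that the two orthogonal axes are given in the device frame at the start of the interaction. It uses a swing-twist decomposition, one standard way to split a rotation into a twist about one axis and a swing about an axis perpendicular to it; whether the iOrb used exactly this decomposition is an assumption. The swing is projected onto the second axis, so any rotation about the remaining third axis is discarded. All function names are hypothetical.

```python
import math

Quat = tuple[float, float, float, float]  # (w, x, y, z), unit length
Vec3 = tuple[float, float, float]


def q_mul(p: Quat, q: Quat) -> Quat:
    """Hamilton product p * q."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    )


def q_conj(q: Quat) -> Quat:
    w, x, y, z = q
    return (w, -x, -y, -z)


def from_axis_angle(axis: Vec3, angle: float) -> Quat:
    s = math.sin(angle / 2.0)
    return (math.cos(angle / 2.0), axis[0] * s, axis[1] * s, axis[2] * s)


def dot3(a, b) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def wrap(angle: float) -> float:
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def orientation_to_2d(q_start: Quat, q_now: Quat,
                      main_axis: Vec3, second_axis: Vec3) -> tuple[float, float]:
    """Return (primary, secondary) angles in radians for the motion since q_start.

    main_axis and second_axis must be orthogonal unit vectors (device frame).
    """
    # Rotation since the interaction started, expressed in the device frame.
    q_rel = q_mul(q_conj(q_start), q_now)
    w, x, y, z = q_rel
    # Twist: the part of q_rel that rotates about main_axis.
    p = dot3((x, y, z), main_axis)
    n = math.hypot(w, p)
    if n < 1e-9:
        twist = (1.0, 0.0, 0.0, 0.0)  # twist undefined for a 180-degree swing
    else:
        twist = (w / n, p * main_axis[0] / n, p * main_axis[1] / n, p * main_axis[2] / n)
    # Swing: the remainder, so that q_rel = swing * twist.
    sw, sx, sy, sz = q_mul(q_rel, q_conj(twist))
    primary = wrap(2.0 * math.atan2(dot3(twist[1:], main_axis), twist[0]))
    secondary = wrap(2.0 * math.atan2(dot3((sx, sy, sz), second_axis), sw))
    return primary, secondary
```

Measuring relative to `q_start` means both parameters start at zero wherever the hand happens to be when the interaction begins, much like a trackball.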

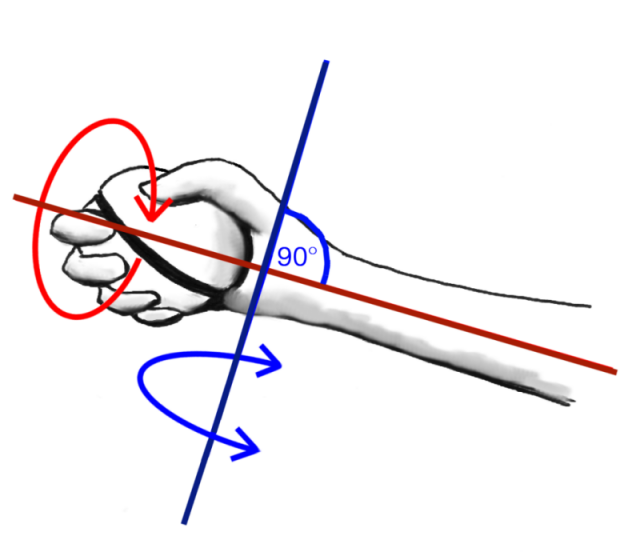

However, the choice of axes is crucial. The main idea is to derive a main axis from the current orientation of the user’s hand instead of using a fixed axis. To determine this main axis, the rotation axis for supination/pronation of the forearm (see Figure 2) is sensed during the first moments of the interaction. The assumption behind this choice is that this movement is the simplest for the human hand and will therefore be used almost unconsciously as the first intuitive movement of any interaction. Other movements, induced by wrist flexion/extension or abduction/adduction, require more conscious effort from the user.
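How exactly the supination/pronation axis is sensed is not specified here. One simple possibility, sketched below in Python under stated assumptions, is to record orientation samples (unit quaternions) during the first moments of an interaction, take the sample that has rotated furthest from the first one, and use the axis of that relative rotation, expressed in the device frame, as the main axis. The minimum angle, the reference direction used to fix the axis sign, the hint vector for the orthogonal axis, and all function names are hypothetical. A second axis orthogonal to the main axis is then obtained by Gram-Schmidt orthogonalization.

```python
import math
from typing import Optional, Sequence

Quat = tuple[float, float, float, float]  # (w, x, y, z), unit length
Vec3 = tuple[float, float, float]


def q_mul(p: Quat, q: Quat) -> Quat:
    """Hamilton product p * q."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    )


def q_conj(q: Quat) -> Quat:
    w, x, y, z = q
    return (w, -x, -y, -z)


def normalize(v) -> Vec3:
    n = math.sqrt(sum(c * c for c in v))
    return (v[0] / n, v[1] / n, v[2] / n)


def estimate_main_axis(samples: Sequence[Quat],
                       min_angle: float = math.radians(10.0),
                       reference: Vec3 = (0.0, 0.0, 1.0)) -> Optional[Vec3]:
    """Axis (device frame) of the largest rotation relative to samples[0].

    Returns None until the hand has rotated by at least min_angle.
    """
    q0 = samples[0]
    best_axis, best_angle = None, 0.0
    for q in samples[1:]:
        w, x, y, z = q_mul(q_conj(q0), q)
        if w < 0.0:  # q and -q encode the same rotation; take the short way round
            w, x, y, z = -w, -x, -y, -z
        s = math.sqrt(x * x + y * y + z * z)
        angle = 2.0 * math.atan2(s, w)
        if s > 0.0 and angle > best_angle:
            best_axis, best_angle = (x / s, y / s, z / s), angle
    if best_axis is None or best_angle < min_angle:
        return None
    # Fix the sign so that turning the hand either way yields the same axis.
    if sum(a * r for a, r in zip(best_axis, reference)) < 0.0:
        best_axis = (-best_axis[0], -best_axis[1], -best_axis[2])
    return best_axis


def orthogonal_axis(main: Vec3, hint: Vec3 = (1.0, 0.0, 0.0)) -> Vec3:
    """Gram-Schmidt: remove from hint its component along main, then normalize."""
    d = sum(m * h for m, h in zip(main, hint))
    v = tuple(h - d * m for m, h in zip(main, hint))
    if math.sqrt(sum(c * c for c in v)) < 1e-6:  # hint (nearly) parallel to main
        alt = (0.0, 1.0, 0.0) if abs(main[0]) > 0.5 else (1.0, 0.0, 0.0)
        d = sum(m * h for m, h in zip(main, alt))
        v = tuple(h - d * m for m, h in zip(main, alt))
    return normalize(v)
```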

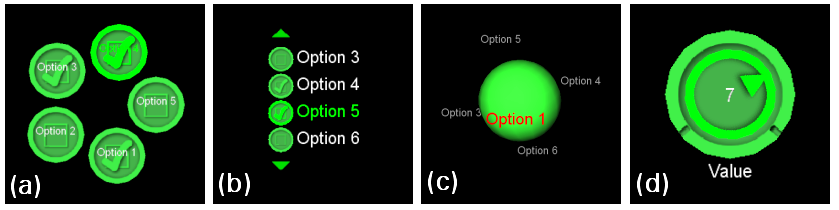

Widget library

To make the most of the iOrb’s interaction method, user interface widgets have to be adapted. We followed the design of traditional 1D command widgets previously used in virtual environments [1, chapter 8] and created widget types that are familiar in style yet intuitively usable through a single rotational parameter. This parameter is typically the primary axis rotation provided by the 2D parametrization described above. A second interaction dimension can be added by using the secondary axis rotation.
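As an illustration only, the Python sketch below shows how a hierarchical 1D menu could be driven by the primary rotation angle and the button. The sector width per item, the rounding rule, and the convention that the angle at which a menu level opens highlights its first item are hypothetical choices, not taken from the iOrb widget library.

```python
import math
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MenuItem:
    label: str
    children: list["MenuItem"] = field(default_factory=list)


class RotaryMenu:
    """Hierarchical 1D menu driven by a single rotation angle (radians)."""

    def __init__(self, root: MenuItem, sector: float = math.radians(20.0)):
        self.stack = [root]    # path from the root to the open menu level
        self.sector = sector   # hypothetical: twist angle covered by one item
        self.offset = 0.0      # primary angle at which the current level opened
        self.highlight = 0

    @property
    def items(self) -> list[MenuItem]:
        return self.stack[-1].children

    def update(self, primary_angle: float) -> str:
        """Highlight the item under the current angle and return its label."""
        steps = (primary_angle - self.offset) / self.sector
        index = math.floor(steps + 0.5)  # nearest sector
        self.highlight = max(0, min(len(self.items) - 1, index))
        return self.items[self.highlight].label

    def press(self, primary_angle: float) -> Optional[str]:
        """Button press: descend into a submenu, or return the chosen leaf label."""
        item = self.items[self.highlight]
        if item.children:
            self.stack.append(item)
            self.offset = primary_angle  # new level starts where the hand is now
            self.highlight = 0
            return None
        return item.label

    def back(self, primary_angle: float) -> None:
        """Return to the parent level (how 'back' is triggered is left open)."""
        if len(self.stack) > 1:
            self.stack.pop()
            self.offset = primary_angle
            self.highlight = 0
```

For a set of checkboxes, `press` would toggle a flag on the highlighted item instead of descending into a submenu.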

Publications

The iOrb was presented at the IEEE VR 2005 Workshop on New Directions in 3D User Interfaces. You can download the paper here.

Video

Watch a video of the iOrb in action here (WMV9, 9 MB).

Downloads

| File | Size | Format |
|---|---|---|
| iorb.avi | 9.31 MB | AVI video |