Natural User Interfaces for Mobile Mixed Reality

Lab project in the area of Virtual and Augmented Reality.

Keywords: Handheld Mixed Reality, 3D Interaction, Human-Computer Interface, Natural User Interface, Interaction Metaphors, Gesture Recognition.

About this Project

This project combines several research activities aimed at creating intuitive ways to interact with 3D content in a mobile mixed reality environment. The goal of these joint research efforts is to investigate novel concepts for 3D natural user interfaces.

Additional Information

The approaches we investigated include:

- Creation of novel selection and manipulation techniques

- Evaluation of state-of-the-art markerless hand posture recognition approaches

- Development of markerless hand posture interaction for handheld mixed reality using RGB and depth data

3D Selection & Manipulation Techniques

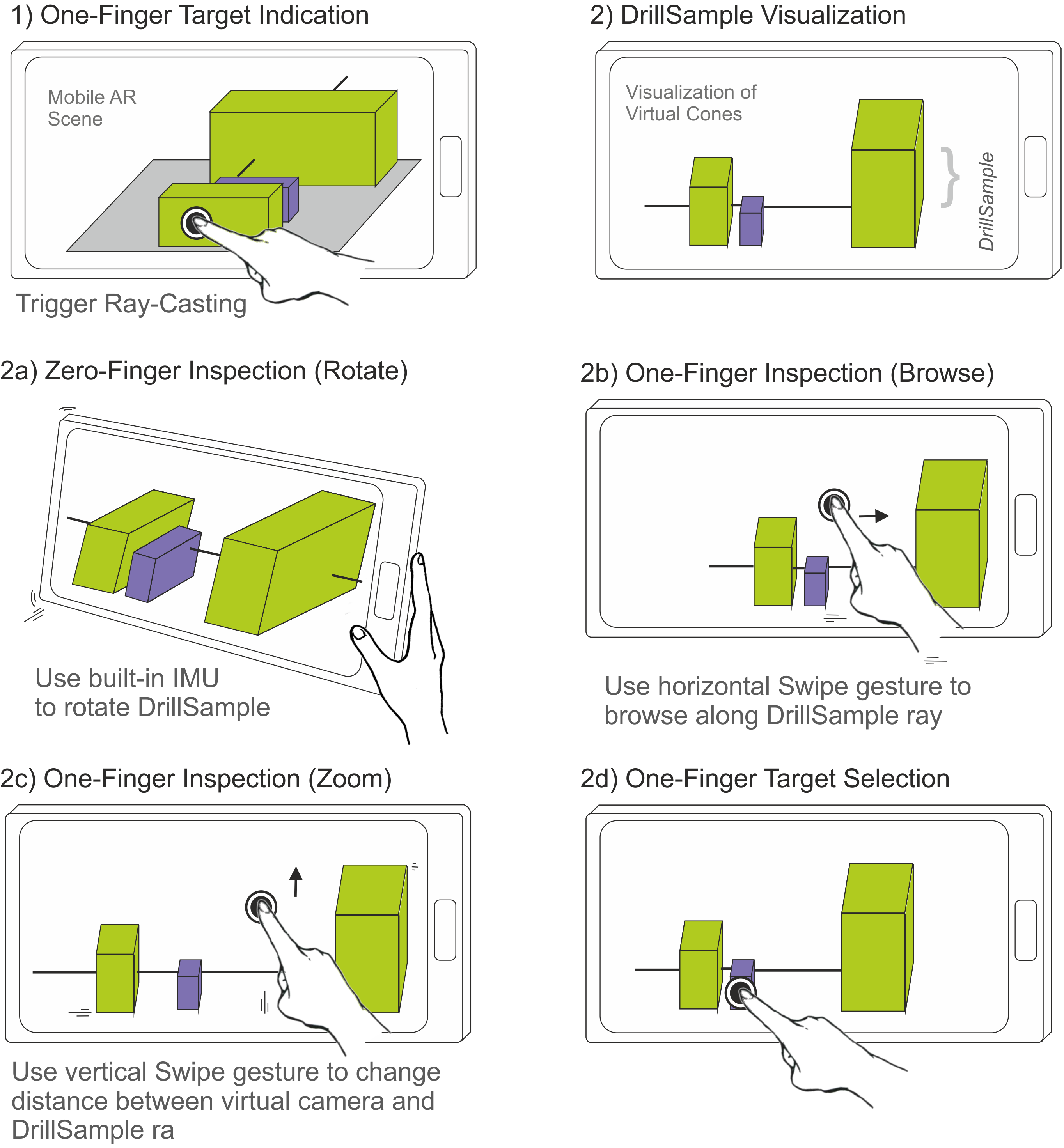

DrillSample: Precise Selection in Dense Handheld MR Environments

We introduce DrillSample, a novel selection technique that allows accurate one-handed selection in dense handheld AR environments. It requires only a single touch input and preserves the full original spatial context of the selected objects. This makes it possible to disambiguate and select strongly occluded objects, as well as objects that are very similar in visual appearance.
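The description above does not say how DrillSample gathers candidates behind the touch point. One common way to obtain every object along the touch ray, rather than only the front-most one, is to cast the ray through the whole scene and keep all hits sorted by depth. The Python sketch below illustrates only that general idea; the bounding-sphere approximation and the names `SceneObject` and `drill` are hypothetical, and how DrillSample then presents the candidates while preserving their spatial context is not reproduced here.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class SceneObject:
    name: str
    center: Vec3   # world-space position
    radius: float  # bounding-sphere radius (simplifying assumption)


def ray_sphere_entry(origin: Vec3, direction: Vec3, center: Vec3, radius: float) -> Optional[float]:
    """Distance along a normalized ray to the first sphere intersection, or None."""
    ox, oy, oz = (origin[i] - center[i] for i in range(3))
    b = ox * direction[0] + oy * direction[1] + oz * direction[2]
    c = ox * ox + oy * oy + oz * oz - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # ray misses the sphere
    root = math.sqrt(disc)
    t = -b - root
    if t < 0:
        t = -b + root  # ray origin lies inside the sphere
    return t if t >= 0 else None


def drill(origin: Vec3, direction: Vec3, objects: List[SceneObject]) -> List[SceneObject]:
    """Collect every object pierced by the touch ray, ordered from near to far."""
    norm = math.sqrt(sum(d * d for d in direction))
    unit = tuple(d / norm for d in direction)
    hits = []
    for obj in objects:
        t = ray_sphere_entry(origin, unit, obj.center, obj.radius)
        if t is not None:
            hits.append((t, obj))
    hits.sort(key=lambda hit: hit[0])
    return [obj for _, obj in hits]
```

With one object directly in front of the camera and a second one hidden behind it on the same ray, `drill` returns both, front-most first, so a follow-up step could let the user pick the occluded one.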

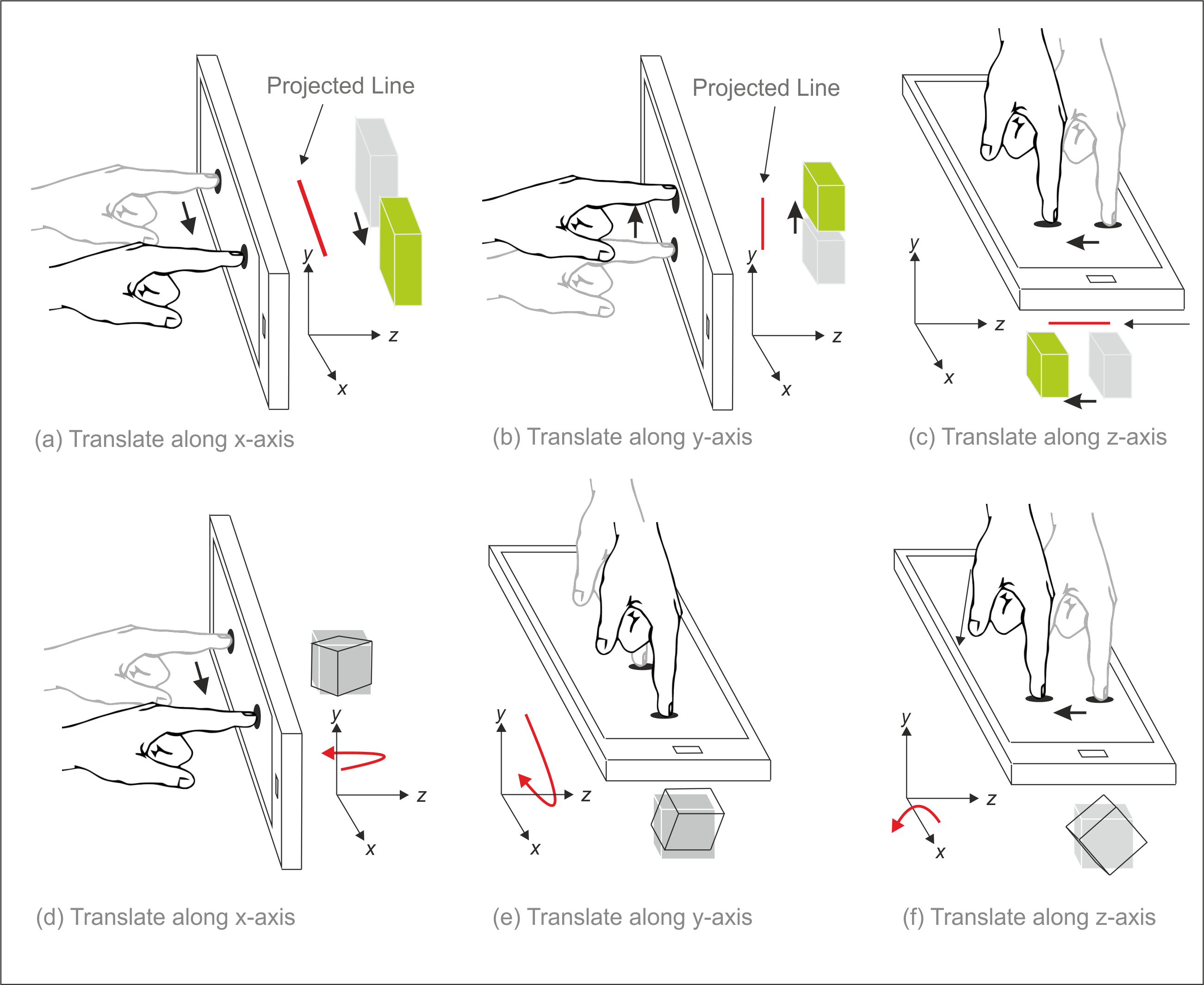

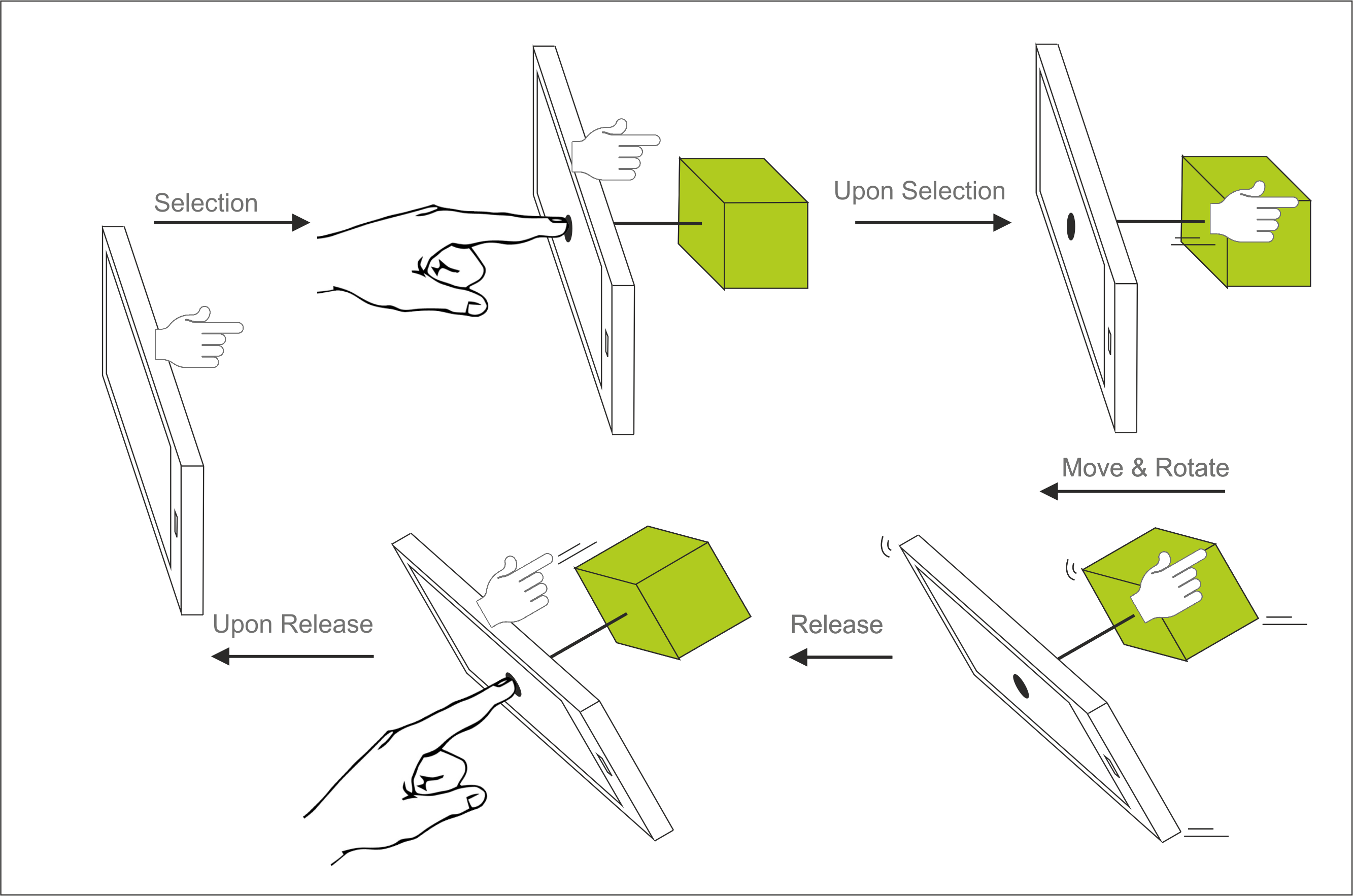

3DTouch & HOMER-S: Intuitive Manipulation for One-Handed Handheld MR

We introduce two novel, intuitive six-degree-of-freedom (6DOF) manipulation techniques, 3DTouch and HOMER-S, which provide full 3D transformations of a virtual object in a one-handed handheld augmented reality scene. 3DTouch uses only very simple touch gestures and decomposes the degrees of freedom, whereas HOMER-S provides integral 6DOF transformations and is decoupled from screen input to overcome physical limitations.

| 3DTouch: separated 6DOF manipulation | HOMER-S: integral 6DOF manipulation |
|---|---|
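To make the separated versus integral distinction concrete, the following Python sketch contrasts the two styles of update on a 4x4 object pose. It rests on assumptions not stated above: the separated update moves the object along a single world axis chosen by a hypothetical gesture, and the integral update applies the device's full 6DOF motion since the previous frame to the object, so that the object keeps its pose relative to the device. All function names are hypothetical, and the actual mappings used by 3DTouch and HOMER-S (for example any scaling of motion) may differ.

```python
import math


def identity():
    """4x4 identity matrix as row-major nested lists."""
    return [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]


def matmul(a, b):
    """Product of two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def translation(dx, dy, dz):
    m = identity()
    m[0][3], m[1][3], m[2][3] = dx, dy, dz
    return m


def rotation_z(angle):
    """Rotation about the z axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    m = identity()
    m[0][0], m[0][1], m[1][0], m[1][1] = c, -s, s, c
    return m


def rigid_inverse(m):
    """Inverse of a rigid transform [R | t], i.e. [R^T | -R^T t]."""
    inv = identity()
    for i in range(3):
        for j in range(3):
            inv[i][j] = m[j][i]
        inv[i][3] = -sum(m[j][i] * m[j][3] for j in range(3))
    return inv


def separated_update(object_pose, axis, delta):
    """Separated DOF (hypothetical, 3DTouch-style): translate along one world axis only."""
    offset = [0.0, 0.0, 0.0]
    offset[axis] = delta  # axis: 0 = x, 1 = y, 2 = z, e.g. selected by a touch gesture
    return matmul(translation(*offset), object_pose)


def integral_update(object_pose, device_prev, device_now):
    """Integral 6DOF (hypothetical, HOMER-S-style): apply the device's motion to the object."""
    device_delta = matmul(device_now, rigid_inverse(device_prev))
    return matmul(device_delta, object_pose)
```

In the separated case each gesture changes exactly one degree of freedom and leaves the rest untouched; in the integral case a single device movement changes position and orientation together.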

Natural User Interfaces using RGB+D Data

We performed an in-depth evaluation of state-of-the-art hand posture recognition algorithms that use RGB or RGB+D (RGB plus depth) data. We then adapted them for use in a mobile mixed reality environment, where they act as a mobile natural user interface (NUI) for markerless 3D interaction (selection and translation).
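The evaluated algorithms are not named here, so the following Python sketch only illustrates a common first step for markerless hand interaction with depth data: segmenting the hand as the pixels closest to the sensor and tracking their centroid to drive translation. It assumes the hand is the nearest object in view; the 100 mm depth band and all function names are hypothetical, and classifying the hand posture itself (for example to trigger selection) is not shown.

```python
from typing import List, Optional, Tuple

DepthImage = List[List[float]]  # depth in millimetres; 0 means "no reading"
Pixel = Tuple[int, int]         # (row, column)


def segment_hand(depth: DepthImage, band_mm: float = 100.0) -> List[Pixel]:
    """Pixels within band_mm of the nearest valid depth reading.

    Assumes the hand is the object closest to the depth sensor.
    """
    valid = [d for row in depth for d in row if d > 0]
    if not valid:
        return []
    nearest = min(valid)
    return [
        (r, c)
        for r, row in enumerate(depth)
        for c, d in enumerate(row)
        if 0 < d <= nearest + band_mm
    ]


def centroid(pixels: List[Pixel]) -> Optional[Tuple[float, float]]:
    """Mean (row, column) of the segmented pixels, or None if nothing was found."""
    if not pixels:
        return None
    n = len(pixels)
    return (sum(r for r, _ in pixels) / n, sum(c for _, c in pixels) / n)


def hand_translation(prev: DepthImage, curr: DepthImage,
                     band_mm: float = 100.0) -> Optional[Tuple[float, float]]:
    """Image-space displacement (rows, columns) of the hand centroid between two frames."""
    a = centroid(segment_hand(prev, band_mm))
    b = centroid(segment_hand(curr, band_mm))
    if a is None or b is None:
        return None
    return (b[0] - a[0], b[1] - a[1])
```

How this image-space displacement is scaled into a translation of the selected virtual object is application-specific and left out.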

| ![NUI_RGBD.png](/projects/mobile-interaction/downloads/nui-rgbd.png) | ![NUI_testSetup.png](/projects/mobile-interaction/downloads/nui-testsetup.png) |
|---|---|
| The mobile NUI using RGB+D data to recognize hand postures. | Our test setup: an Android Asus TF201 tablet, using its front camera for RGB capture and a connected Kinect for Windows for depth capture. |
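Because RGB and depth come from two separate devices in this setup, combining them generally requires mapping each depth pixel into the RGB image. How this was done is not described here; the Python sketch below shows one standard approach under a pinhole camera model, assuming intrinsics (fx, fy, cx, cy) for both cameras and a rigid depth-to-RGB transform obtained from a prior calibration. All parameter values and function names are hypothetical.

```python
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Intrinsics = Tuple[float, float, float, float]  # (fx, fy, cx, cy) in pixels


def backproject(u: float, v: float, depth_m: float, intr: Intrinsics) -> Vec3:
    """Depth pixel (u, v) with metric depth -> 3D point in the depth camera frame."""
    fx, fy, cx, cy = intr
    return ((u - cx) * depth_m / fx, (v - cy) * depth_m / fy, depth_m)


def rigid_transform(p: Vec3, rot: List[List[float]], t: Vec3) -> Vec3:
    """p' = R p + t, with R a 3x3 rotation given as nested lists."""
    return tuple(sum(rot[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def project(p: Vec3, intr: Intrinsics) -> Optional[Tuple[float, float]]:
    """3D point in the RGB camera frame -> pixel, or None if it lies behind the camera."""
    fx, fy, cx, cy = intr
    x, y, z = p
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def depth_to_rgb_pixel(u, v, depth_m, depth_intr, rgb_intr, rot, t):
    """Map one depth pixel to the RGB pixel that observes the same 3D point."""
    point_depth = backproject(u, v, depth_m, depth_intr)
    point_rgb = rigid_transform(point_depth, rot, t)
    return project(point_rgb, rgb_intr)
```

With identical intrinsics and an identity transform the mapping returns the input pixel; any real offset between the tablet camera and the depth sensor shows up as a shift that depends on depth.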