Immersive Interaction in Streamed Dense 3D Surface Reconstructions

Lab project in the area of Virtual and Augmented Reality.

Keywords: Virtual Reality, Immersive, Selection, Navigation, Dense 3D Surface Reconstructions, Point Clouds, Perception, Occlusion Management.

About this Project

In this project, we investigate novel methods for understanding and interacting with large dense 3D surface reconstructions while users are immersed in them through virtual reality. The research covers streaming of large dense reconstructions to enable live exploration, navigation, automatic semantic understanding during live 3D reconstruction, and selection of (occluded) reconstruction patches.

Streaming & Exploration of Dense 3D Surface Reconstructions in Immersive VR

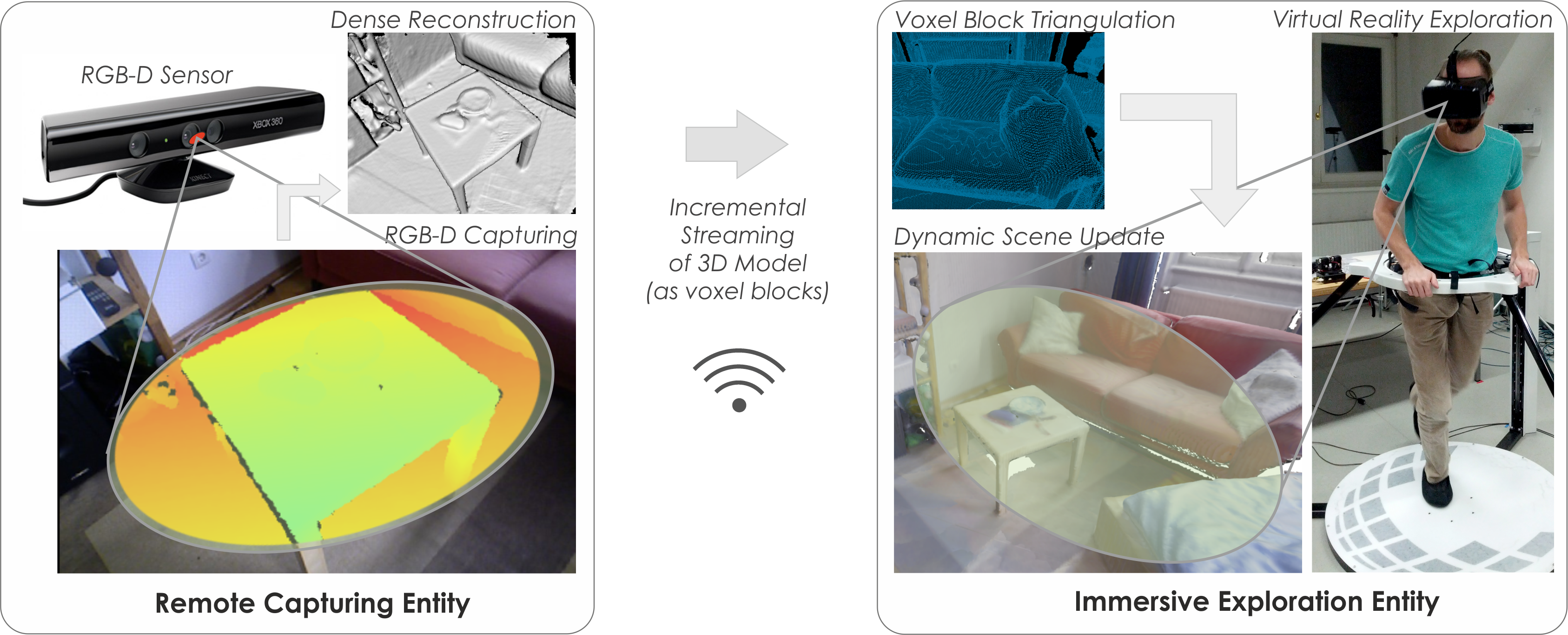

We introduce a novel framework that enables large-scale dense 3D scene reconstruction, data streaming over the network, and immersive exploration of the reconstructed environment using virtual reality. The system is operated by two remote entities: one entity (for instance, an autonomous aerial vehicle) captures and reconstructs the environment and transmits the data to another entity (such as a human observer), who can immersively explore the 3D scene independently of the capturing entity's view. The performance evaluation showed that the framework can perform RGB-D data capture, dense 3D reconstruction, streaming, and dynamic scene updating in real time for indoor environments of up to 100 m², using either a state-of-the-art mobile computer or a workstation. Our work thus provides a foundation for immersive exploration of remotely captured and incrementally reconstructed dense 3D scenes, which has not been shown before and opens up new directions for future research. Please find all details in our ISMAR 2016 publication.
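The dynamic scene updating described above can be illustrated with a small sketch of the receiving side. This is not the framework's actual protocol or data layout, which are described in the ISMAR 2016 publication. It assumes, purely for illustration, that the reconstruction is sent as independently versioned chunks; the names `ChunkUpdate`, `ClientScene`, `chunk_id`, and `version` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]
ChunkId = Tuple[int, int, int]


@dataclass(frozen=True)
class ChunkUpdate:
    """Hypothetical message: one piece of the reconstruction at a given version."""
    chunk_id: ChunkId            # assumed spatial index of the chunk
    version: int                 # assumed counter, increased on every re-send
    vertices: Tuple[Vec3, ...]   # geometry payload (simplified to points)


@dataclass
class ClientScene:
    """Scene state held by the exploring entity (assumed structure)."""
    chunks: Dict[ChunkId, ChunkUpdate] = field(default_factory=dict)

    def apply(self, update: ChunkUpdate) -> bool:
        """Store the update unless an equal or newer version is already present.

        Returns True if the scene changed and needs to be redrawn.
        """
        current = self.chunks.get(update.chunk_id)
        if current is not None and current.version >= update.version:
            return False  # stale or duplicate packet, ignore it
        self.chunks[update.chunk_id] = update
        return True


# Usage: updates may arrive out of order; only newer versions replace a chunk.
scene = ClientScene()
first = scene.apply(ChunkUpdate((0, 0, 0), 1, ((0.0, 0.0, 0.0),)))
stale = scene.apply(ChunkUpdate((0, 0, 0), 0, ()))
newer = scene.apply(ChunkUpdate((0, 0, 0), 2, ((0.0, 0.0, 0.0), (0.1, 0.0, 0.0))))
```

Versioning each chunk keeps the client's view consistent when parts of the scene are refined and re-sent while the user is already exploring them.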

System Overview

Video

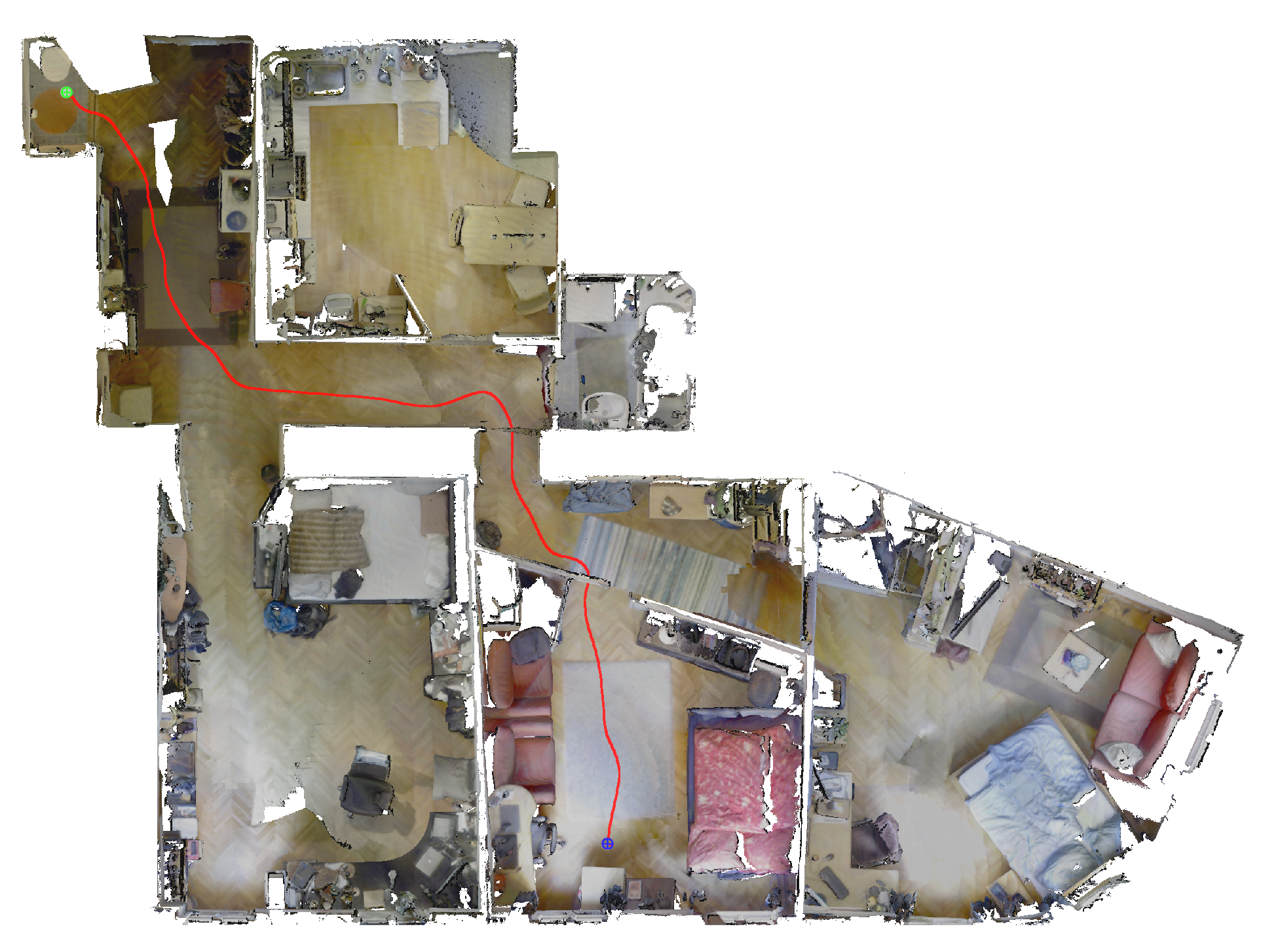

Data Set - Viennese Flat

Specs

For testing, we used camera trajectories precomputed with [Choi et al.] to stabilize the camera pose estimation and ensure a well-aligned model. [Download] [Citation]

Large Scale Cut Plane: An Occlusion Management Technique for Immersive Dense 3D Reconstructions

![[JPG] cutplane.jpg](/projects/immersivepointclouds/downloads/cutplane.jpg)

We introduce Large Scale Cut Plane, a novel 3D selection technique designed for large-scale dense 3D reconstructions in which users are immersed. It gives users a means to select user-defined parts (patches) of the reconstruction for later use despite inter-object occlusions, which are inherent to large dense 3D reconstructions due to scene geometry or reconstruction artifacts. Large Scale Cut Plane enables segmentation and subsequent selection of visible, partly occluded, or fully occluded patches within a large 3D reconstruction, even at far distance. We combine Large Scale Cut Plane with an immersive virtual reality setup to foster 3D scene understanding and natural user interaction.
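The geometric idea behind segmenting a reconstruction with a plane can be sketched as splitting points by their signed distance to a user-placed plane. This is a minimal illustration under our own assumptions, not the implementation from the VRST 2016 publication: the function names `normalize`, `signed_distance`, and `split_by_plane` are hypothetical, and the reconstruction is simplified to a list of 3D points.

```python
import math
from typing import Iterable, List, Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    """Scale a vector to unit length; a zero vector cannot define a plane."""
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if length == 0.0:
        raise ValueError("plane normal must be non-zero")
    return (v[0] / length, v[1] / length, v[2] / length)


def signed_distance(point: Vec3, plane_point: Vec3, unit_normal: Vec3) -> float:
    """Distance from the plane to the point, positive on the normal's side."""
    return sum((p - q) * n for p, q, n in zip(point, plane_point, unit_normal))


def split_by_plane(
    points: Iterable[Vec3], plane_point: Vec3, plane_normal: Vec3
) -> Tuple[List[Vec3], List[Vec3]]:
    """Partition points into (front, back) relative to the plane.

    Points lying exactly on the plane are assigned to the front side.
    """
    unit_normal = normalize(plane_normal)
    front: List[Vec3] = []
    back: List[Vec3] = []
    for point in points:
        if signed_distance(point, plane_point, unit_normal) >= 0.0:
            front.append(point)
        else:
            back.append(point)
    return front, back


# Usage: a horizontal plane through the origin separates upper from lower points.
points = [(0.0, 0.0, 1.0), (0.0, 0.0, -1.0), (1.0, 1.0, 0.0)]
front, back = split_by_plane(points, (0.0, 0.0, 0.0), (0.0, 0.0, 2.0))
```

In such a sketch, hiding one side of the plane would expose patches that were previously occluded, which could then be selected.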

System Overview

Results

In our VRST 2016 publication, we also present results from a user study that compares the performance and usability of our technique with a baseline technique. The results indicate that Large Scale Cut Plane is superior in terms of speed and precision, although the user interface needs improvement. The following figure shows the distance traveled and the number of interaction steps required to complete the task with either Large Scale Cut Plane or the baseline technique, Raycast.

![[PNG] teaser_small.png](/projects/immersivepointclouds/downloads/teaser-small.png)

![[PNG] comparison_small.png](/projects/immersivepointclouds/downloads/comparison-small.png)